What I learned from testing the latest trend…

We've heard a lot about ChatGPT since the start of the year, and it has become the latest craze in the email marketing world. I wanted to jump right into AI. However, before I use anything with clients, I always evaluate the technology thoroughly for myself. Here's some information on my journey, including tests I ran comparing the performance of AI-written copy to human-written copy.

These were my assumptions about using AI:

- Using an AI tool would save me time.

- Adding AI to the process would considerably improve performance. The metrics weren't bad to begin with, but I expected to see some dramatic increases thanks to AI.

- AI tools would write better copy than I could, with more creative subject lines and content. (This assumption caused some controversy when I mentioned it during a recent OI-members-only Zoom discussion. That reaction prompted me to revisit the AI tests I had run to date and their results, hence this blog post.)

I have used AI to create copy in three different situations:

- Website Content

- Email Subject Lines

- Email Subject Line and Body Copy

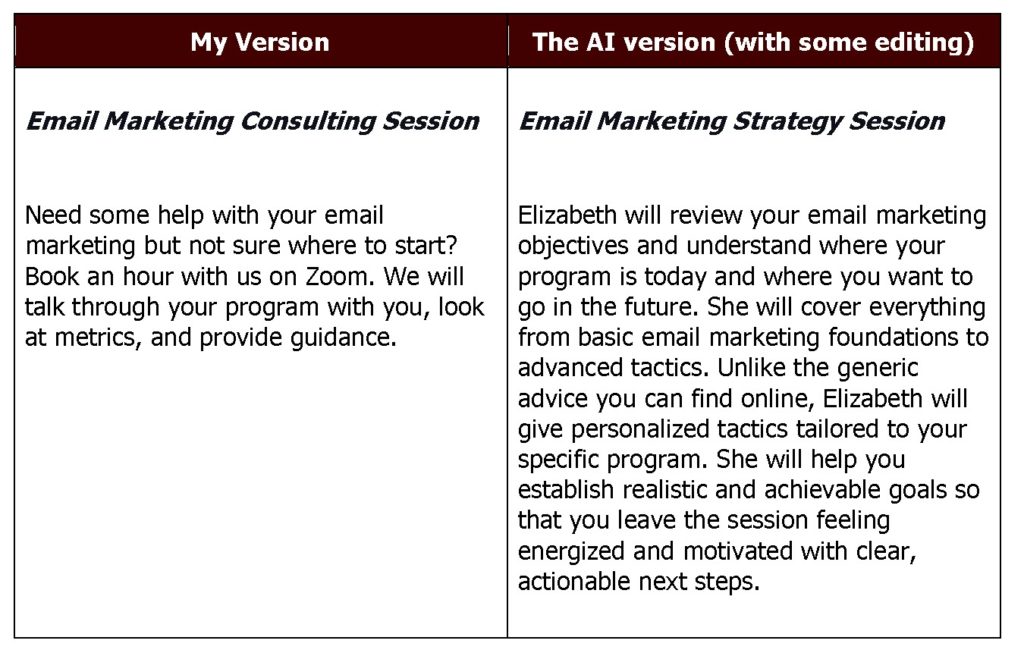

1. Website Content

I used AI to help rewrite the description of a strategy session offering on my website. I pasted the original copy into the AI prompt and asked it to improve the text.

I loved the copy the AI provided. I only needed to tweak it slightly to put it in my voice.

It's anecdotal (I didn't run a formal A/B split test), but I have sold more strategy sessions since the description was updated.

Even so, I think these results are meaningful. My hypothesis for why the AI copy won is that it did a better job of selling my expertise and telling prospective clients exactly what they would gain from investing in a session with me.

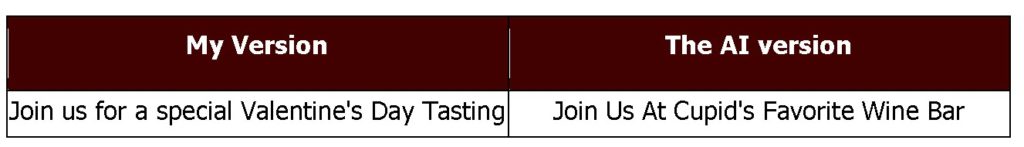

2. Subject Line Test

I used AI to generate subject lines based on body copy I had written for client emails. I pasted the full body copy of the email into the AI prompt and asked it for subject lines. In this case, the email invited subscribers to a Valentine's Day wine tasting.

The subject line I wrote was pretty direct; the AI version was more fun.

For this test, the AI version's open rate was 1.9% higher than mine, but its click rate was 42% lower. So who won, my version or the AI version? When you test, you need to choose a key performance indicator (KPI) as far down the funnel as you can. For this client in the restaurant industry, click rate is the deepest metric I can track, so my version won.

Why do I think my version won? My subject line, while not exciting, accurately described the body of the email, so when subscribers opened it, the content was what they expected. The AI subject line was more fun, so more people opened the email. But the content didn't necessarily deliver on the subject line's promise, so fewer people clicked.

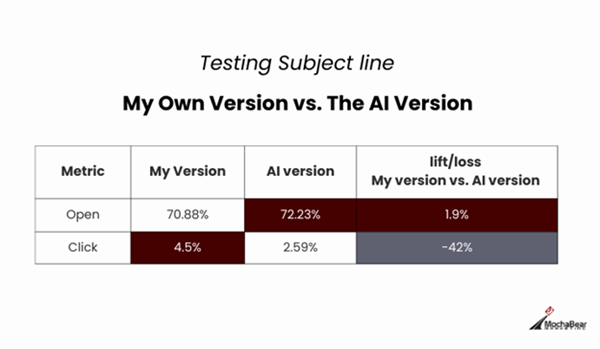

3. Subject Line & Email Content Test

The second test pitted my subject line and content against AI versions of each. I gave the AI tool my content and asked it to write an alternative version. In this case, the email was a newsletter, so the content was long, and the goal was booking appointments rather than selling a product. I then asked the AI to generate a subject line based on its own version of the content.

As in the last test, I thought the AI content had more pizzazz and asked for the meeting in a slightly more fun way.

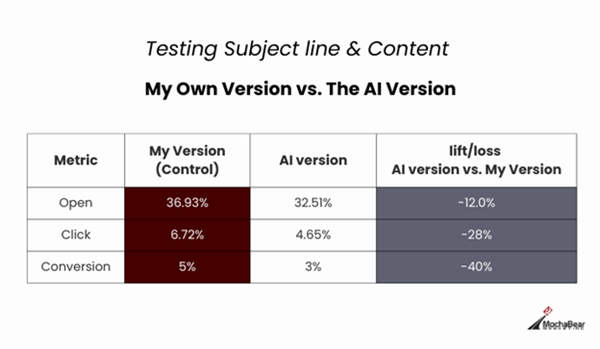

Here is what the test showed:

The AI version's open rate was 12% lower than mine, and its click rate was 28% lower. But we use conversion rate as our KPI because it's farthest down the funnel, and the AI version's conversion rate was 40% lower than the version I created. So once again, Elizabeth for the win!
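The decision rule used in both tests (judge the winner only on the KPI farthest down the funnel that you can track) can be written as a short Python sketch. The function names, the three-stage funnel order, and the sign convention (positive means the AI version did better) are illustrative assumptions; the input percentages are the differences reported above, and only the sign of each difference matters for this rule.

```python
# Illustrative sketch: funnel stages ordered from top (first) to bottom (last).
# This ordering is an assumption for the example, not a standard.
FUNNEL_ORDER = ["open_rate", "click_rate", "conversion_rate"]


def deepest_kpi(tracked):
    """Return the tracked metric that sits farthest down the funnel."""
    candidates = [m for m in FUNNEL_ORDER if m in tracked]
    if not candidates:
        raise ValueError("no known funnel metric was tracked")
    return candidates[-1]


def pick_winner(ai_vs_human):
    """Pick a winner using only the deepest tracked KPI.

    ai_vs_human maps a metric name to the AI version's difference versus
    the human version, in percent (positive means the AI version did better).
    """
    kpi = deepest_kpi(ai_vs_human)
    diff = ai_vs_human[kpi]
    if diff > 0:
        return kpi, "AI"
    if diff < 0:
        return kpi, "human"
    return kpi, "tie"


# Differences as reported in the two tests above.
subject_line_test = {"open_rate": 1.9, "click_rate": -42.0}
full_email_test = {"open_rate": -12.0, "click_rate": -28.0, "conversion_rate": -40.0}

print(pick_winner(subject_line_test))  # ('click_rate', 'human')
print(pick_winner(full_email_test))    # ('conversion_rate', 'human')
```

In the subject line test the open rate favored the AI version, but because click rate sits lower in the funnel, it decides the outcome.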

Why mine won

My theory is that my version performed better because I understand the client's brand and have analyzed their metrics on every campaign. I know precisely which of their campaigns performed well and where others fell short. So when I wrote my content, I again chose directness over fun, and clearly, that is what worked for this audience.

Conclusion

So does that mean AI can't improve email marketing metrics?

My fellow OI members who were surprised that I assumed the AI tool could do better than me were right. In both tests above, my content outperformed the AI content. As these AI technologies improve, they will help us produce emails faster, which is a good thing, but they don't replace an email marketer.

The bottom line is that you need to know your audience, test, and look at all the metrics. I still use AI to generate both subject lines and content, BUT only as a starting point for ideas, because I understand the client's audience better than these tools do. Over time, I do think this approach will help improve email campaign metrics, but it can't just be a copy and paste from AI.

Editor's note: Elizabeth will be leading a discussion of this article during this week's OI-members-only Zoom discussion, Thursday, April 20, 2023, from 12:00 noon to 1:00 PM ET. OI members, check the OI discussion list for an email with the link. Not a member of OI? Join here ($200/year), and then you'll be eligible to join the weekly Zoom discussions and more!